publications

publications by category in reverse chronological order. generated by jekyll-scholar.

2025

- Superposition as Lossy Compression — Measure with Sparse Autoencoders and Connect to Adversarial Vulnerability. Leonard F. Bereska, Zoe Tzifa-Kratira, Reza Samavi, and Stratis Gavves. TMLR, Aug 2025

Neural networks achieve remarkable performance through superposition: encoding multiple features as overlapping directions in activation space rather than dedicating individual neurons to each feature. This phenomenon challenges interpretability; when neurons respond to multiple unrelated concepts, understanding network behavior becomes difficult. Yet despite its importance, we lack principled methods to measure superposition. We present an information-theoretic framework measuring a neural representation’s effective degrees of freedom. We apply Shannon entropy to sparse autoencoder activations to compute the number of effective features as the minimum number of neurons needed for interference-free encoding. Equivalently, this measures how many "virtual neurons" the network simulates through superposition. When networks encode more effective features than they have actual neurons, they must accept interference as the price of compression. Our metric strongly correlates with ground truth in toy models, detects minimal superposition in algorithmic tasks (effective features approximately equal neurons), and reveals a systematic reduction under dropout. Layer-wise patterns of effective features mirror findings from studies of intrinsic dimensionality on Pythia-70M. The metric also captures developmental dynamics, detecting sharp feature consolidation during the grokking phase transition. Surprisingly, adversarial training can increase effective features while improving robustness, contradicting the hypothesis that superposition causes vulnerability. Instead, the effect of adversarial training on superposition depends on task complexity and network capacity; simple tasks with ample capacity allow feature expansion (abundance regime), while complex tasks or limited capacity force feature reduction (scarcity regime).
By defining superposition as lossy compression, this work enables principled, practical measurement of how neural networks organize information under computational constraints and, in particular, connects superposition to adversarial robustness.
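
The entropy-based feature count described above can be illustrated with a minimal sketch. It assumes, as a simplification that may differ from the paper's exact definition, that each SAE feature's share of the total absolute activation mass forms a probability distribution p, and that the number of effective features is exp(H(p)), the exponential of its Shannon entropy in nats. The function names `effective_features` and `superposition_ratio` are hypothetical.

```python
import math
from typing import Sequence


def effective_features(activations: Sequence[Sequence[float]]) -> float:
    """Effective feature count exp(H(p)) of SAE activations (rows = samples).

    Assumption (not necessarily the paper's exact definition): p_j is feature j's
    share of the total absolute activation mass across all samples.
    """
    n_features = len(activations[0])
    # Accumulate absolute activation mass per SAE feature over all samples.
    mass = [0.0] * n_features
    for row in activations:
        for j, a in enumerate(row):
            mass[j] += abs(a)
    total = sum(mass)
    if total == 0.0:
        return 0.0  # no active features at all
    # Shannon entropy (in nats) of the normalized mass distribution.
    entropy = -sum((m / total) * math.log(m / total) for m in mass if m > 0.0)
    # exp(H) is the number of equally used features that would give the same entropy.
    return math.exp(entropy)


def superposition_ratio(activations: Sequence[Sequence[float]], n_neurons: int) -> float:
    """Effective features per actual neuron; values above 1 suggest superposition."""
    return effective_features(activations) / n_neurons
```

With four SAE features used equally often (a 4x4 one-hot activation matrix), `effective_features` returns 4 up to rounding, and `superposition_ratio(acts, 2)` about 2, i.e. a 2-neuron layer would be simulating two virtual neurons per real one. Using exp of the entropy rather than a raw count of active features means rarely used features count as less than one full feature each.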

@article{bereska_superposition_2025, title = {Superposition as Lossy Compression --- Measure with Sparse Autoencoders and Connect to Adversarial Vulnerability}, author = {Bereska, Leonard F. and Tzifa-Kratira, Zoe and Samavi, Reza and Gavves, Stratis}, year = {2025}, month = aug, journal = {TMLR}, }

2024

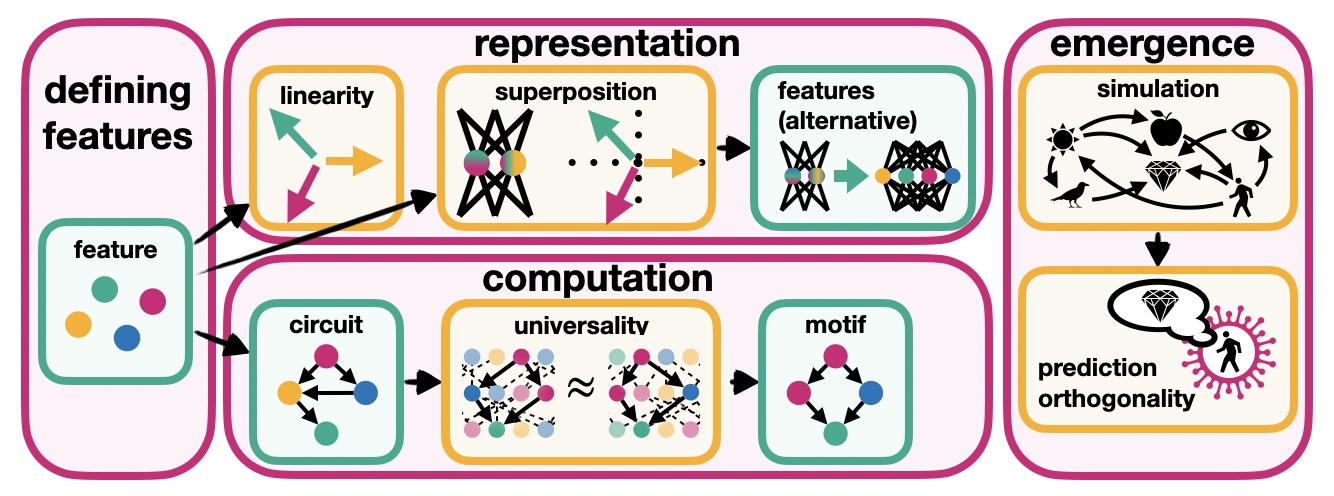

- Mechanistic Interpretability for AI Safety — A Review. Leonard F. Bereska and Efstratios Gavves. TMLR, Apr 2024

Understanding AI systems’ inner workings is critical for ensuring value alignment and safety. This review explores mechanistic interpretability: reverse-engineering the computational mechanisms and representations learned by neural networks into human-understandable algorithms and concepts to provide a granular, causal understanding. We establish foundational concepts such as features encoding knowledge within neural activations and hypotheses about their representation and computation. We survey methodologies for causally dissecting model behaviors and assess the relevance of mechanistic interpretability to AI safety. We investigate challenges surrounding scalability, automation, and comprehensive interpretation. We advocate clarifying concepts, setting standards, scaling techniques to handle complex models and behaviors, and expanding to domains such as vision and reinforcement learning. Mechanistic interpretability could help prevent catastrophic outcomes as AI systems become more powerful and inscrutable.
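
One widely used way to causally dissect model behavior is activation patching, sketched here on a hypothetical two-unit toy network. A hidden activation cached from a clean input is spliced into a run on a corrupted input, and the share of the clean-vs-corrupt output gap it restores measures that unit's causal contribution. The weights and function names are illustrative assumptions, not taken from the review.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical toy model: 2 inputs -> 2 ReLU hidden units -> 1 linear output.
W_HIDDEN = [[1.0, 0.0], [0.0, 1.0]]
W_OUT = [2.0, -1.0]


def forward(x: Sequence[float],
            patch: Optional[Tuple[int, float]] = None) -> Tuple[List[float], float]:
    """Run the toy model; `patch=(k, value)` overwrites hidden unit k before the output layer."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W_HIDDEN]
    if patch is not None:
        k, value = patch
        hidden[k] = value  # the intervention: splice in an activation from another run
    out = sum(w * h for w, h in zip(W_OUT, hidden))
    return hidden, out


def patching_effect(clean_x: Sequence[float], corrupt_x: Sequence[float], k: int) -> float:
    """Fraction of the clean-minus-corrupt output gap restored by patching unit k."""
    clean_hidden, clean_out = forward(clean_x)
    _, corrupt_out = forward(corrupt_x)
    _, patched_out = forward(corrupt_x, patch=(k, clean_hidden[k]))
    gap = clean_out - corrupt_out
    if gap == 0.0:
        raise ValueError("clean and corrupted inputs give the same output")
    return (patched_out - corrupt_out) / gap
```

For `clean_x = [1.0, 0.0]` and `corrupt_x = [0.0, 0.0]`, patching unit 0 restores the full gap (effect 1.0) while unit 1 restores none (0.0), localizing the behavior to unit 0. Effects outside [0, 1] are possible when components push the output in opposite directions: with `clean_x = [1.0, 1.0]` the two units give 2.0 and -1.0.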

@article{bereska_mechanistic_2024, title = {Mechanistic Interpretability for AI Safety — A Review}, author = {Bereska, Leonard F. and Gavves, Efstratios}, year = {2024}, month = apr, journal = {TMLR}, eprint = {2404.14082}, }

2023

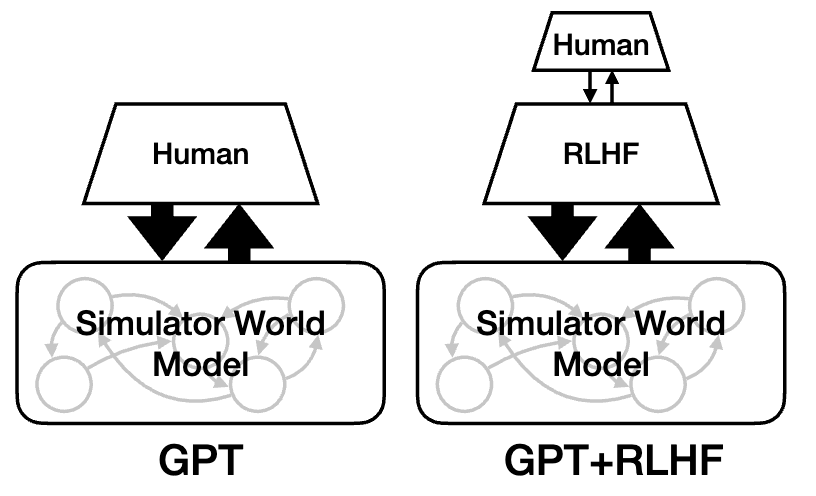

- Taming Simulators: Challenges, Pathways and Vision for the Alignment of Large Language Models. Leonard F. Bereska and Efstratios Gavves. AAAI-SS, Oct 2023

As AI systems continue to advance in power and prevalence, ensuring alignment between humans and AI is crucial to prevent catastrophic outcomes. The greater the capabilities and generality of an AI system, combined with its development of goals and agency, the higher the risks associated with misalignment. While the concept of superhuman artificial general intelligence is still speculative, language models show indications of generality that could extend to generally capable systems. Regarding agency, this paper emphasizes the understanding of prediction-trained models as simulators rather than agents. Nonetheless, agents may emerge accidentally from internal processes, so-called simulacra, or deliberately through fine-tuning with reinforcement learning. As a result, the focus of alignment research shifts towards aligning simulacra, comprehending and mitigating mesa-optimization, and aligning agents derived from prediction-trained models. The paper outlines the challenges of aligning simulators and presents research directions based on this understanding. Additionally, it envisions a future where aligned simulators are critical in fostering successful human-AI collaboration. This vision encompasses exploring emulation approaches and the integration of simulators into cyborg systems to enhance human cognitive abilities. By acknowledging the risks associated with misaligned AI, delving into the concept of simulacra, and presenting strategies for aligning agents and simulacra, this paper contributes to the ongoing efforts to safeguard human values in developing and deploying AI systems.

@article{bereska_taming_2023, title = {Taming Simulators: Challenges, Pathways and Vision for the Alignment of Large Language Models}, shorttitle = {Taming Simulators}, author = {Bereska, Leonard F. and Gavves, Efstratios}, year = {2023}, month = oct, journal = {AAAI-SS}, volume = {1}, number = {1}, pages = {68--72}, issn = {2994-4317}, doi = {10.1609/aaaiss.v1i1.27478}, }

2022

- Continual Learning of Dynamical Systems With Competitive Federated Reservoir Computing. Leonard F. Bereska and Efstratios Gavves. Proceedings of The 1st Conference on Lifelong Learning Agents, Nov 2022

Machine learning has recently proven efficient at learning differential equations and dynamical systems from data. However, the data is commonly assumed to originate from a single never-changing system. In contrast, when modeling real-world dynamical processes, the data distribution often shifts due to changes in the underlying system dynamics. Continual learning of these processes aims to rapidly adapt to abrupt system changes without forgetting previous dynamical regimes. This work proposes an approach to continual learning based on reservoir computing, a state-of-the-art method for training recurrent neural networks on complex spatiotemporal dynamical systems. Reservoir computing fixes the recurrent network weights, so they cannot be forgotten, and only updates the linear projection heads to the output. We propose to train multiple competitive prediction heads concurrently. Inspired by neuroscience’s predictive coding, only the most predictive heads activate, laterally inhibiting and thus protecting the inactive heads from forgetting induced by interfering parameter updates. We show that this multi-head reservoir minimizes interference and catastrophic forgetting on several dynamical systems, including the Van der Pol oscillator, the chaotic Lorenz attractor, and the high-dimensional Lorenz-96 weather model. Our results suggest that reservoir computing is a promising candidate framework for the continual learning of dynamical systems. We provide our code for data generation, method, and comparisons at https://github.com/leonardbereska/multiheadreservoir.
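
The competitive multi-head idea can be sketched under simplifying assumptions that may differ from the paper: a fixed random echo-state reservoir with tanh units, linear heads that predict the next input from the reservoir state, a hard winner-take-all rule that picks the head with the lowest current prediction error, and an online least-mean-squares (LMS) update applied to the winner only. The class, method, and parameter names (`MultiHeadReservoir`, `lr`, `spectral_scale`) are hypothetical; the authors' own implementation is in the repository referenced in the abstract.

```python
import math
import random
from typing import List, Sequence, Tuple


class MultiHeadReservoir:
    """Fixed random reservoir with several competing linear readout heads (sketch)."""

    def __init__(self, dim_in: int, dim_res: int, n_heads: int,
                 lr: float = 0.05, spectral_scale: float = 0.5, seed: int = 0):
        rng = random.Random(seed)
        self.dim_res = dim_res
        self.lr = lr
        # Input and recurrent weights are drawn once and never trained,
        # so later regimes cannot overwrite them.
        self.w_in = [[rng.uniform(-0.5, 0.5) for _ in range(dim_in)]
                     for _ in range(dim_res)]
        # Gaussian entries scaled by 1/sqrt(N) give a spectral radius of
        # roughly `spectral_scale` for large N (circular law).
        scale = spectral_scale / math.sqrt(dim_res)
        self.w_res = [[rng.gauss(0.0, 1.0) * scale for _ in range(dim_res)]
                      for _ in range(dim_res)]
        # Trainable heads: one (dim_in x dim_res) linear map per head.
        self.heads = [[[0.0] * dim_res for _ in range(dim_in)]
                      for _ in range(n_heads)]
        self.state = [0.0] * dim_res

    def _drive(self, u: Sequence[float]) -> None:
        """Update the reservoir state with input u (tanh echo-state update)."""
        self.state = [
            math.tanh(sum(w * x for w, x in zip(self.w_in[i], u))
                      + sum(w * s for w, s in zip(self.w_res[i], self.state)))
            for i in range(self.dim_res)
        ]

    def predict(self, head: int) -> List[float]:
        """Linear readout of the current state through one head."""
        return [sum(w * s for w, s in zip(row, self.state)) for row in self.heads[head]]

    def train_step(self, u: Sequence[float], target: Sequence[float]) -> Tuple[int, float]:
        """Drive with u, let heads compete on predicting target, train only the winner."""
        self._drive(u)
        preds = [self.predict(h) for h in range(len(self.heads))]
        errors = [sum((p - t) ** 2 for p, t in zip(pred, target)) for pred in preds]
        winner = min(range(len(self.heads)), key=lambda h: errors[h])
        # LMS update of the winning head; losing heads stay untouched,
        # which is what shields them from interfering updates.
        for row, p, t in zip(self.heads[winner], preds[winner], target):
            err = t - p
            for j in range(self.dim_res):
                row[j] += self.lr * err * self.state[j]
        return winner, errors[winner]
```

Feeding, for example, `train_step([math.sin(0.3 * t)], [math.sin(0.3 * (t + 1))])` for a few hundred steps should drive the winning head's error down. A hard arg-min is the simplest form of lateral inhibition; a soft or thresholded competition would be a natural variant.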

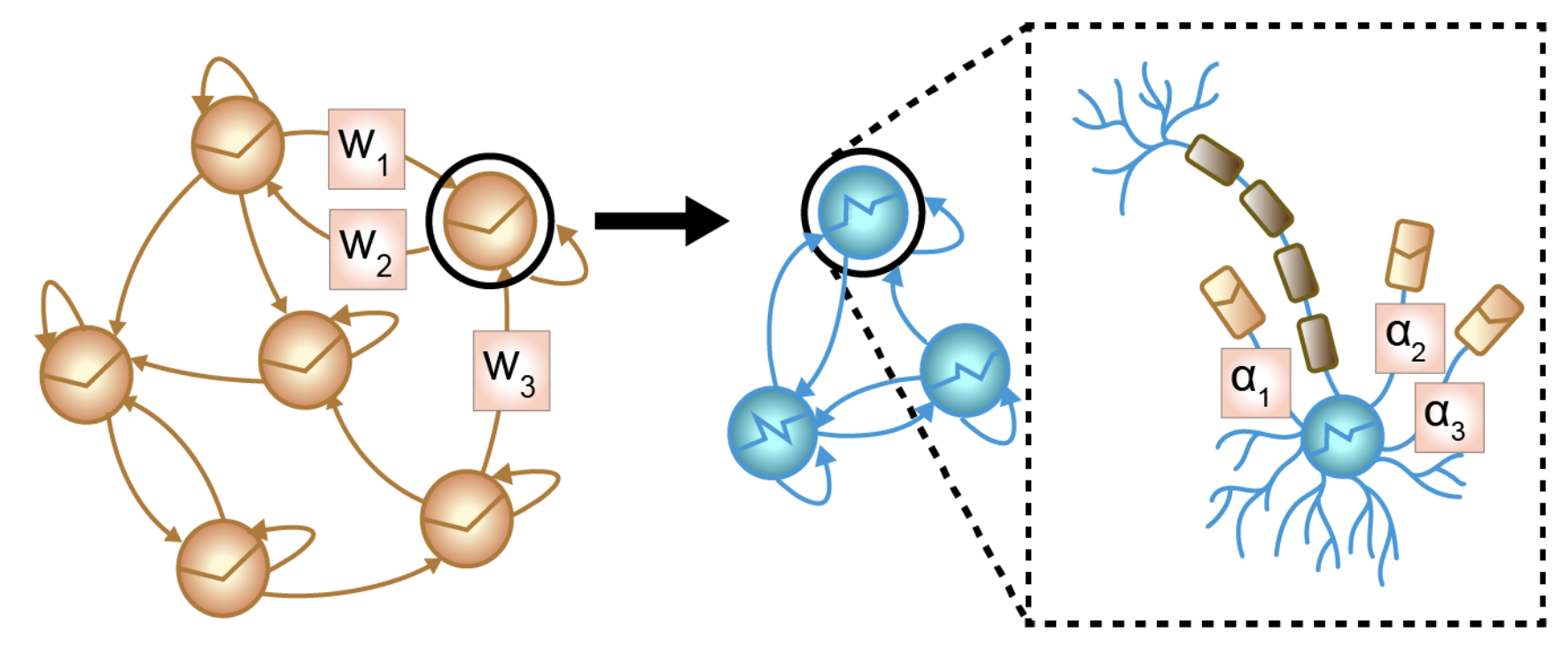

@article{bereska_continual_2022, title = {Continual Learning of Dynamical Systems With Competitive Federated Reservoir Computing}, author = {Bereska, Leonard F. and Gavves, Efstratios}, year = {2022}, month = nov, journal = {Proceedings of The 1st Conference on Lifelong Learning Agents}, pages = {335--350}, issn = {2640-3498}, urldate = {2024-07-03}, }

- Tractable Dendritic RNNs for Reconstructing Nonlinear Dynamical Systems. Manuel Brenner, Florian Hess, Jonas M. Mikhaeil, Leonard F. Bereska, Zahra Monfared, Po-Chen Kuo, and Daniel Durstewitz. Proceedings of the 39th International Conference on Machine Learning, Jun 2022

In many scientific disciplines, we are interested in inferring the nonlinear dynamical system underlying a set of observed time series, a challenging task in the face of chaotic behavior and noise. Previous deep learning approaches toward this goal often suffered from a lack of interpretability and tractability. In particular, the high-dimensional latent spaces often required for a faithful embedding, even when the underlying dynamics lives on a lower-dimensional manifold, can hamper theoretical analysis. Motivated by the emerging principles of dendritic computation, we augment a dynamically interpretable and mathematically tractable piecewise-linear (PL) recurrent neural network (RNN) with a linear spline basis expansion. We show that this approach retains all the theoretically appealing properties of the simple PLRNN, yet boosts its capacity for approximating arbitrary nonlinear dynamical systems in comparatively low dimensions. We employ two frameworks for training the system: one combining backpropagation through time (BPTT) with teacher forcing, and another based on fast and scalable variational inference. We show that the dendritically expanded PLRNN achieves better reconstructions with fewer parameters and dimensions on various dynamical systems benchmarks and compares favorably to other methods, while retaining a tractable and interpretable structure.
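
The linear spline basis expansion can be written down in a few lines. The sketch assumes the latent update `z_t = A z_{t-1} + W sum_b alpha_b max(0, z_{t-1} - h_b) + h_0`, with `A` diagonal, `W` the connectivity matrix, scalar branch weights `alpha_b`, and threshold vectors `h_b`; this is our reading of the model and it omits external inputs and the observation model. With a single branch, `alpha_1 = 1` and `h_1 = 0`, it reduces to the plain ReLU PLRNN step `z_t = A z_{t-1} + W max(0, z_{t-1}) + h_0`. The function name `dend_plrnn_step` is hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def dend_plrnn_step(z: Sequence[float],
                    a_diag: Sequence[float],
                    w: Sequence[Sequence[float]],
                    alphas: Sequence[float],
                    thresholds: Sequence[Sequence[float]],
                    h0: Sequence[float]) -> Vector:
    """One latent step of a dendritically expanded PLRNN (assumed form, see lead-in).

    z_t = A z_{t-1} + W phi(z_{t-1}) + h0, where
    phi_i(z) = sum_b alphas[b] * max(0, z_i - thresholds[b][i]) is a linear spline.
    """
    m = len(z)
    # Linear-spline ("dendritic branch") expansion of each latent unit.
    phi = [sum(a * max(0.0, z[i] - h[i]) for a, h in zip(alphas, thresholds))
           for i in range(m)]
    # Diagonal self-connection plus connectivity acting on the expanded units.
    return [a_diag[i] * z[i] + sum(w[i][j] * phi[j] for j in range(m)) + h0[i]
            for i in range(m)]
```

Because `phi` is piecewise linear in `z`, each step remains piecewise linear, which plausibly is why the expanded model keeps the simple PLRNN's tractability; extra branches only add more linear pieces per unit. For example, two branches with weights `[1.0, -1.0]` and thresholds at 0 and 1 yield a unit whose expansion saturates at 1.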

@article{brenner_tractable_2022, title = {Tractable Dendritic RNNs for Reconstructing Nonlinear Dynamical Systems}, author = {Brenner, Manuel and Hess, Florian and Mikhaeil, Jonas M. and Bereska, Leonard F. and Monfared, Zahra and Kuo, Po-Chen and Durstewitz, Daniel}, year = {2022}, month = jun, journal = {Proceedings of the 39th International Conference on Machine Learning}, pages = {2292--2320}, issn = {2640-3498}, }

2019

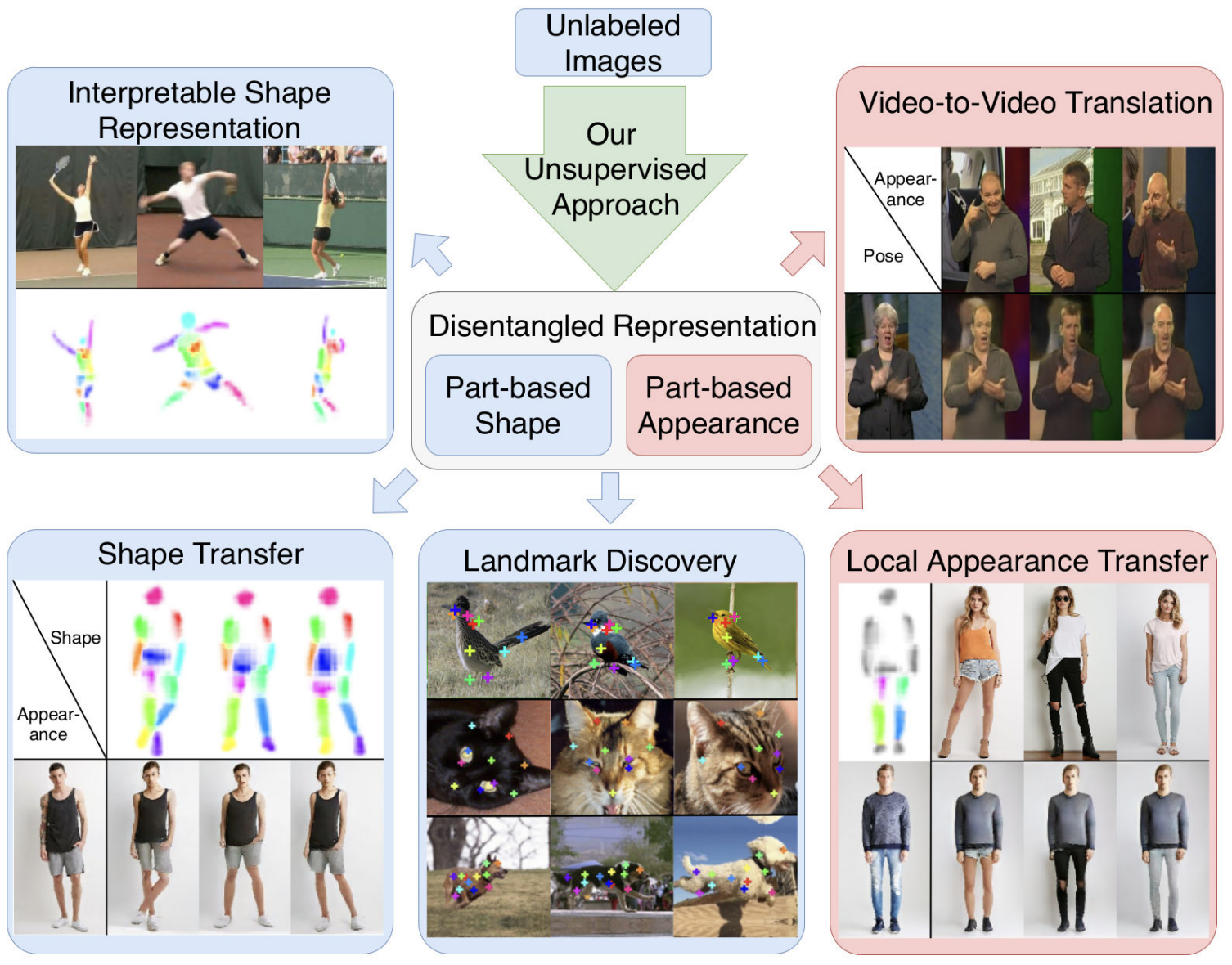

- Unsupervised Part-Based Disentangling of Object Shape and Appearance. Dominik Lorenz, Leonard F. Bereska, Timo Milbich, and Bjorn Ommer. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Jun 2019

Large intra-class variation is the result of changes in multiple object characteristics. Images, however, only show the superposition of different variable factors such as appearance or shape. Therefore, learning to disentangle and represent these different characteristics poses a great challenge, especially in the unsupervised case. Moreover, large object articulation calls for a flexible part-based model. We present an unsupervised approach for disentangling appearance and shape by learning parts consistently over all instances of a category. Our model for learning an object representation is trained by simultaneously exploiting invariance and equivariance constraints between synthetically transformed images. Since no part annotation or prior information on an object class is required, the approach is applicable to arbitrary classes. We evaluate our approach on a wide range of object categories and diverse tasks including pose prediction, disentangled image synthesis, and video-to-video translation. The approach outperforms the state of the art on unsupervised keypoint prediction and compares favorably even against supervised approaches on the task of shape and appearance transfer.
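
The two constraints can be illustrated with toy losses. In this sketch, shape is summarized by the centroid of a part heatmap, which should move along with a spatial transformation (equivariance), while an appearance descriptor should stay unchanged under a photometric change (invariance). Integer translations with zero padding and a brightness gain are hypothetical stand-ins for the paper's synthetic transformations, and the detector and appearance functions passed in are placeholders for the learned networks.

```python
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]


def part_centroid(heatmap: Image) -> Tuple[float, float]:
    """Expected (row, col) position under a non-negative part heatmap."""
    total = sum(sum(r) for r in heatmap)
    if total <= 0.0:
        raise ValueError("heatmap has no positive mass")
    row = sum(i * v for i, r in enumerate(heatmap) for v in r) / total
    col = sum(j * v for r in heatmap for j, v in enumerate(r)) / total
    return row, col


def translate(img: Image, dy: int, dx: int) -> Image:
    """Shift an image by (dy, dx) pixels with zero padding (stand-in spatial transform)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            si, sj = i - dy, j - dx
            if 0 <= si < h and 0 <= sj < w:
                out[i][j] = img[si][sj]
    return out


def equivariance_loss(detector: Callable[[Image], Image], img: Image, dy: int, dx: int) -> float:
    """Part location should move with the image: ||c(T x) - T(c(x))||^2."""
    r0, c0 = part_centroid(detector(img))
    r1, c1 = part_centroid(detector(translate(img, dy, dx)))
    return (r1 - (r0 + dy)) ** 2 + (c1 - (c0 + dx)) ** 2


def invariance_loss(appearance: Callable[[Image], Sequence[float]], img: Image, gain: float) -> float:
    """Appearance code should ignore a photometric change (here a brightness gain)."""
    a0 = appearance(img)
    a1 = appearance([[gain * v for v in r] for r in img])
    return sum((x - y) ** 2 for x, y in zip(a0, a1))
```

With an identity detector (`lambda x: x`) and a blob that stays inside the frame after shifting, `equivariance_loss` is zero up to rounding; likewise, intensities normalized by their sum are invariant to a brightness gain, whereas raw intensities are not. In training, such losses would be minimized over the parameters of a learned part detector and appearance encoder rather than checked on fixed functions.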

@article{lorenz_unsupervised_2019, title = {Unsupervised Part-Based Disentangling of Object Shape and Appearance}, author = {Lorenz, Dominik and Bereska, Leonard F. and Milbich, Timo and Ommer, Bjorn}, year = {2019}, journal = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition}, pages = {10955--10964}, urldate = {2024-07-03}, }