Leonard F. Bereska

AI Safety Researcher | PhD Candidate at the University of Amsterdam

4.125, LAB42

Science Park 900

1098XH Amsterdam

I’m Leonard, a PhD candidate at the University of Amsterdam working to make AI go well for humans. My research has migrated from the internals of neural networks toward bigger questions about what happens when increasingly capable AI systems interact with human institutions at scale.

research focus

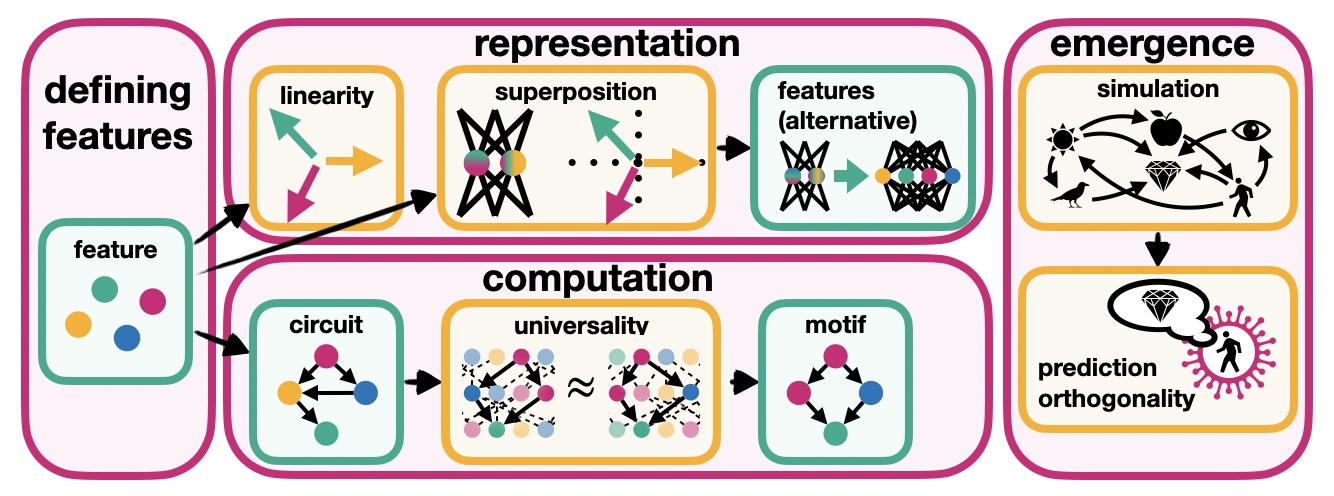

I started in mechanistic interpretability, reverse-engineering how neural networks compress and represent information. That work led to a review paper documenting foundations for the field. When I became interested in its connection to adversarial robustness, I had to formalize and measure superposition as a prerequisite. More recently (maybe prompted by developments in the world), I became interested in what happens outside the model: how do AI systems reshape the humans and institutions that deploy them? How can AI be a force for freedom?

I’m now developing theoretical foundations for understanding human agency in a world saturated with AI agency. The core intuition: genuine agency requires being predictable to yourself and unpredictable to others. I am excited about how this connects thermodynamics, active inference, and political philosophy. More on this soon.

I supervised Master’s students on projects ranging from bias detection and truthfulness in LLMs to interpretability in medical AI.

community building

In 2023, I co-founded the AI Safety Initiative Amsterdam (AISA) with colleagues who shared the sense that the “ChatGPT moment” demanded action. We’ve organized technical talks, panel discussions with OpenAI researchers and UvA professors, and facilitated reading groups bringing new researchers into the field. AISA is now run by the next generation of AI safety researchers and is thriving.

I’m now working to establish an AI Safety Hub in Amsterdam, with the aim of making it continental Europe’s premier center for AI safety. This involves navigating the question of how safety research can benefit from proximity to industry without being captured by it.

beyond research

When I’m not thinking about neural networks or institutional dynamics:

- Practicing yoga and meditation

- Reading science fiction (Vernor Vinge for plausible singularity scenarios, Ted Chiang for everything else)

- Learning languages (treating language acquisition as a window into cognition), currently Spanish

- Playing with my two chihuahuas, Cicchetti and Pancetta

- Building my digital fortress against surveillance capitalism